As digital assistants and AI-based communication evolve rapidly, voice to voice AI is transforming how we interact with technology. Let’s dive into how this innovation is reshaping communication and impacting various industries.

The Evolution

Voice to voice AI has advanced significantly over the years, thanks to improvements in voice recognition, deep learning, and artificial intelligence.

Early Developments: Voice Recognition Systems

Initially, research focused on helping machines understand human speech, leading to the development of early voice recognition systems. These early models laid the foundation for more advanced AI-driven voice interactions, making communication between humans and machines more seamless.

Advancements in Voice Synthesis

As technology progressed, voice synthesis improved, enabling machines to generate more human-like speech. The ability to convert text into voice was a major milestone, making AI-powered voice interactions more natural and fluid. Researchers refined machine learning models to enhance voice accuracy, resulting in more responsive and engaging AI-driven speech.

The Role of AI and Neural Networks

With the rise of AI and neural networks, real-time speech to speech conversion became possible. AI-powered systems could now not only recognize spoken words but also generate responses in a conversational, human-like manner. This led to the development of voice assistants and AI-driven voice translation, enabling smarter, more dynamic interactions.

The Impact of Large Language Models

With the integration of large language models (LLMs), voice AI has reached new levels of sophistication. These models enable real-time language translation and personalized AI conversations, expanding the technology’s applications across multiple industries.

What is Voice to Voice AI Technology?

It’s an advanced artificial intelligence technology that enables spoken words to be transformed into different voices—often in real-time. This is achieved through a combination of speech recognition, machine translation, and speech synthesis. The technology helps break down language barriers, enabling smooth communication between people who speak different languages.

How Does Voice to Voice AI Work?

Voice-to-voice AI operates through a multimodal architecture that processes speech input to generate human-like audio responses. The workflow begins with an Audio Encoder, such as a pretrained NEST-XL model with 616M parameters, which transforms raw speech input into a high-dimensional representation that captures linguistic and acoustic features.

Audio representations are then mapped to a unified embedding space. The Input Audio Projector aligns the encoded speech data, ensuring modalities are compatible for processing. This is followed by the Embedding Merge stage, where the AI combines these representations to create a comprehensive multimodal input for the Large Language Model (LLM). The LLM, trained on vast amounts of linguistic data, interprets the input, generates meaningful responses, and maintains contextual continuity across conversations.

Once the AI has determined an appropriate response, the Output Projector converts the LLM’s hidden states into speech token representations, ensuring the generated content remains natural and coherent. Finally, the Vocoder synthesizes human-like speech from these tokens, producing an audio output that closely resembles real human conversation. This entire process enables a voice-to-voice AI agent to take spoken input, comprehend its intent, generate a contextually accurate response, and deliver it as synthesized speech—creating a seamless, real-time conversational experience.

This fusion of audio processing and Agentic AI makes multimodal voice agents capable of handling complex interactions, multilingual conversations, and real-time responses, enhancing human-AI communication across various industries.
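The stages described above can be sketched as a toy data flow. This is only an illustration of how the pieces connect, not how NEST-XL or any production system is implemented: every function body is a stand-in, the weights are random, and all names and sizes (`AUDIO_DIM`, `LLM_DIM`, `NUM_SPEECH_TOKENS`, `voice_to_voice`) are assumptions made for the example.

```python
import random

random.seed(0)  # fixed seed so the toy weights are reproducible

# Toy sizes chosen for readability; real models are far larger.
AUDIO_DIM, LLM_DIM, NUM_SPEECH_TOKENS = 4, 6, 8


def make_matrix(rows, cols):
    """Random weights standing in for trained parameters."""
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def matmul(x, w):
    """Multiply a [T x d_in] sequence by a [d_in x d_out] weight matrix."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]


W_IN = make_matrix(AUDIO_DIM, LLM_DIM)           # Input Audio Projector weights
W_OUT = make_matrix(LLM_DIM, NUM_SPEECH_TOKENS)  # Output Projector weights


def audio_encoder(samples):
    """Stand-in encoder: group raw samples into fixed-size frames."""
    usable = len(samples) - len(samples) % AUDIO_DIM
    return [samples[i:i + AUDIO_DIM] for i in range(0, usable, AUDIO_DIM)]


def input_projector(frames):
    """Map audio frames into the LLM's embedding space."""
    return matmul(frames, W_IN)


def embedding_merge(prompt_embeds, audio_embeds):
    """Concatenate prompt and audio embeddings along the time axis."""
    return prompt_embeds + audio_embeds


def llm(embeds):
    """Stand-in LLM: a causal running mean, so step t only sees steps 0..t."""
    hidden, totals = [], [0.0] * LLM_DIM
    for t, row in enumerate(embeds, start=1):
        totals = [s + v for s, v in zip(totals, row)]
        hidden.append([s / t for s in totals])
    return hidden


def output_projector(hidden):
    """Turn each hidden state into the id of its highest-scoring speech token."""
    logits = matmul(hidden, W_OUT)
    return [max(range(NUM_SPEECH_TOKENS), key=row.__getitem__) for row in logits]


def vocoder(tokens, samples_per_token=2):
    """Stand-in vocoder: expand each token id into a few waveform samples."""
    return [tok / NUM_SPEECH_TOKENS for tok in tokens for _ in range(samples_per_token)]


def voice_to_voice(samples, prompt_embeds):
    frames = audio_encoder(samples)
    merged = embedding_merge(prompt_embeds, input_projector(frames))
    tokens = output_projector(llm(merged))
    return tokens, vocoder(tokens)
```

Calling `voice_to_voice` with 16 input samples and a two-step prompt yields six speech tokens (two prompt steps plus four audio frames) and twelve output samples, which makes the shape of the data at each stage easy to follow.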

Pioneers in Voice-to-Voice AI

The earliest adopters of speech to speech AI were research institutions, tech innovators, and AI developers focused on speech recognition, synthesis, and real-time voice processing. Universities, AI research labs, and speech technology experts pioneered innovations in automatic speech recognition (ASR), text-to-speech (TTS), and neural voice synthesis.

By the late 2010s, deep learning and large language models had significantly enhanced capabilities, leading to real-time voice translation, AI-driven voice assistants, and more natural speech interactions. These breakthroughs paved the way for widespread adoption in mobile apps, customer service, and accessibility tools.

The Rise in Mobile Applications

The technology gained popularity in mobile apps in the late 2010s, as speech recognition, LLMs, and real-time voice synthesis improved. By 2020, mobile apps began integrating real-time voice translation, AI-driven voice assistants, and voice modulation for seamless communication. These innovations enabled multilingual conversations, personalized AI voices, and greater accessibility. Over time, improvements in AI processing and deep learning have made voice-to-voice interactions more natural, making them a key feature in mobile apps today.

Key Technologies Behind Voice AI

Automatic Speech Recognition (ASR) – Converts spoken language into text by analyzing phonemes, voice patterns, and accents. Used in transcription, virtual assistants, and voice commands.

Large Language Models (LLMs) – Enhance the accuracy of transcribed text, enable translations, and generate real-time, human-like responses.

Text-to-Speech (TTS) – Converts processed text back into speech with natural intonation, pitch, and expression, making AI-generated voices sound lifelike.
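A minimal sketch of how these three components chain together in a cascaded pipeline is shown below. The class name `CascadedVoicePipeline` and the three `fake_*` functions are hypothetical placeholders; in practice each slot would be filled by a real ASR, LLM, or TTS service.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CascadedVoicePipeline:
    """Chains ASR -> LLM -> TTS; each stage is a pluggable callable."""
    transcribe: Callable[[bytes], str]   # ASR: audio in, text out
    respond: Callable[[str], str]        # LLM: text in, reply text out
    synthesize: Callable[[str], bytes]   # TTS: text in, audio out

    def run(self, audio: bytes) -> bytes:
        text = self.transcribe(audio)
        reply = self.respond(text)
        return self.synthesize(reply)


# Toy stand-ins so the sketch runs without any speech or LLM library.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the bytes already hold the transcript


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretend the encoded text is synthesized audio


pipeline = CascadedVoicePipeline(fake_asr, fake_llm, fake_tts)
print(pipeline.run(b"what is my balance"))
```

Keeping each stage behind a plain callable means any one component can be swapped, for example a different TTS voice, without touching the other two.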

How It Became a Game-Changer for BFSI and Contact Centers

The BFSI (Banking, Financial Services, and Insurance) sector and global contact centers began adopting the technology to automate customer conversations and deliver superior customer experiences.

The technology became a critical tool in transforming customer service operations by enabling efficient, scalable, and personalized interactions.

It allowed businesses to automate routine inquiries, provide real-time assistance, and deliver more accurate, human-like responses at scale.

In the BFSI sector, it empowered financial institutions to enhance customer experiences by handling voice-based banking queries, offering multilingual support, and streamlining account services and loan-related inquiries.

In contact centers, Agentic AI was integrated into agent workflows to provide real-time guidance, automate call summaries, and help manage peak call volumes efficiently. The result was improved agent productivity, reduced operational costs, and a higher quality of service.

The widespread adoption of AI agents and Agentic AI has proven to be a game-changer, improving operational efficiency, ensuring compliance, and delivering personalized customer interactions that scale effectively across industries.

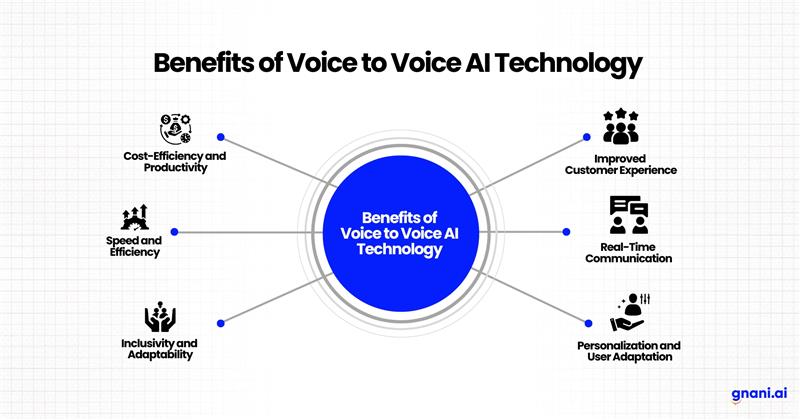

What Are the Benefits?

1. Cost-Efficiency & Productivity: Automates repetitive voice interactions, reducing the need for human agents, optimizing workflows, and allowing employees to focus on complex, high-value tasks.

2. Speed and Efficiency: Enables real-time AI voice generation and transformation, streamlining processes like customer support, content creation, and multilingual communication.

3. Improved Customer Experience: Provides faster, more accurate, and natural-sounding responses, enhancing user satisfaction and engagement in voice-driven applications.

4. Real-Time Communication: Enables instant speech translation and response generation, making conversations seamless and reducing delays in industries like customer service, healthcare, and global business.

5. Personalization & User Adaptation: Adapts to individual users by analyzing speech patterns, tone, and preferences, making interactions more engaging and relevant in virtual assistants and customer support.

Why Is It a Game-Changer?

Natural & Efficient Communication: Enables real-time, fluid interactions without typing or reading.

AI Voice Generator: Creates highly realistic, human-like speech for lifelike conversations.

AI Voice Changer: Adjusts tone, pitch, and accent for personalized and engaging interactions.

Contextual Awareness: Detects tone, emotion, and intent to support scenario-based conversations.

Enhanced User Experience: Faster and more intuitive than traditional input methods.

Future of AI Interaction: Transforms technology into conversational partners, making human-AI communication more seamless and natural.

Applications of Voice to Voice AI

The technology isn’t just about making virtual assistants sound more human—it’s transforming industries in powerful ways. Let’s explore some of its most impactful applications.

From customer support to BFSI and even security, this technology is reshaping communication. Businesses are leveraging voice AI to enhance customer experience (CX), enabling contact center agents to modify their tone, accent, or even language in real-time. This improves clarity, makes interactions more engaging, and removes language barriers, allowing companies to hire talent from diverse regions.

In the Banking, Financial Services, and Insurance (BFSI) sector, voice AI is transforming customer interactions, fraud detection, and authentication. AI-driven voice assistants handle banking queries, process transactions, and provide financial advice, offering 24/7 support without human intervention.

As the technology continues to evolve, its potential applications will only expand, making communication more seamless, secure, and efficient across industries.

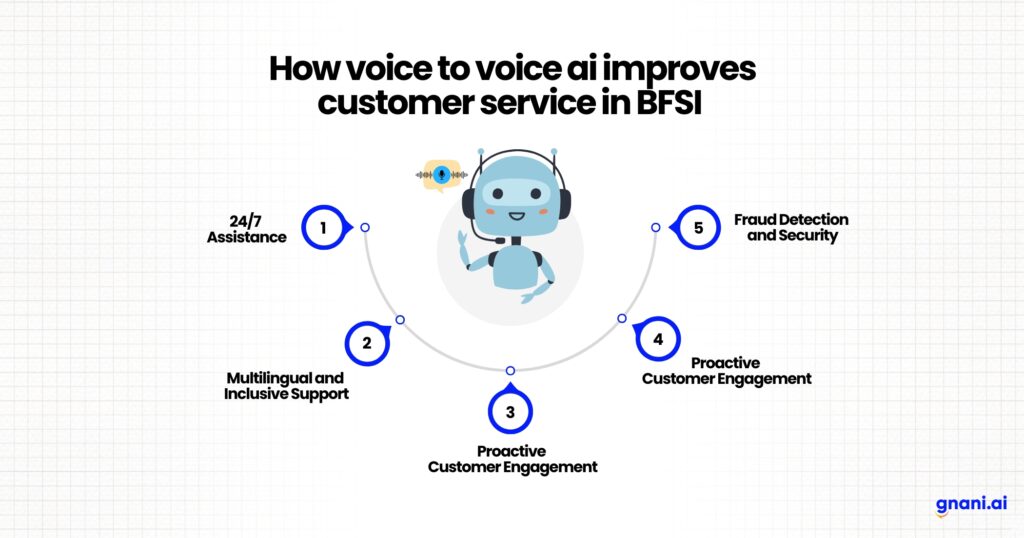

How it improves customer service in BFSI

1. Instant, 24/7 Assistance

- It provides round-the-clock customer support without human intervention.

- Customers get immediate responses to queries about account balances, transactions, loans, and policies.

2. Multilingual and Inclusive Support

- Supports multiple languages and dialects, making banking services more accessible to diverse customers.

- Helps customers who are not comfortable with text-based interactions.

3. Proactive Customer Engagement

- It initiates proactive touchpoints by providing reminders, updates, and cross-sell or upsell opportunities based on predictive analytics.

- This strengthens customer relationships and boosts revenue.

4. Fraud Detection & Security

- It can analyze voice patterns and detect anomalies to prevent fraud in real-time.

- Voice biometrics ensure secure authentication, reducing the risk of identity theft.

5. Cost Efficiency

- Reduces the need for large customer service teams, cutting operational costs.

- AI-powered automation minimizes human errors and increases efficiency.
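The voice biometrics mentioned under Fraud Detection & Security are commonly built on comparing speaker embeddings. The sketch below shows one simple way to do that comparison, using cosine similarity against an enrolled voiceprint. It assumes the embeddings already exist (a real system would obtain them from a speaker-embedding model), and the function names and the `0.8` threshold are illustrative assumptions rather than recommended values.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an empty or all-zero voiceprint as no match
    return dot / (norm_a * norm_b)


def verify_speaker(enrolled, candidate, threshold=0.8):
    """Accept the caller only if their voiceprint is close to the enrolled one.

    The threshold is an assumed value; in practice it is tuned on real data
    to balance false accepts against false rejects.
    """
    return cosine_similarity(enrolled, candidate) >= threshold


enrolled_voiceprint = [1.0, 0.0, 0.0]  # toy embedding for the account holder
same_caller = [0.9, 0.1, 0.0]          # similar direction, so it is accepted
other_caller = [0.0, 1.0, 0.0]         # orthogonal, so it is rejected

print(verify_speaker(enrolled_voiceprint, same_caller))
print(verify_speaker(enrolled_voiceprint, other_caller))
```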

Best Voice to Voice AI solutions for BFSI

AI-Powered Voice Agents

- AI-driven voice agents handle customer inquiries, transactions, and support without human intervention.

- They offer real-time assistance for balance checks, loan applications, and insurance claims.

- Example: A customer can say, “What’s my account balance?” and get an instant response via this technology.

Voice AI for Tailored Insurance & Policies

- AI-driven voice agents analyse customer profiles and suggest the best insurance plans based on lifestyle, financial goals, and risk factors.

- Customers can inquire about coverage details, renewal dates, and benefits through voice AI without waiting for an agent.

- Example: A customer can say, “What’s the best health insurance for a family?” and AI will suggest a plan tailored to their needs.

Multilingual Support for BFSI

- Enables banking and financial services in multiple languages, enhancing accessibility.

- Helps rural, elderly, and less tech-savvy populations access banking and insurance services through voice commands.

- Converts customer queries from one language to another, ensuring seamless communication.

- Example: A customer in India can inquire about a loan in Hindi (“मुझे लोन चाहिए”, meaning “I need a loan”) and receive a response in the same language.
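Replying in the caller's own language requires the system to decide which language the query is in. The sketch below uses a deliberately crude heuristic, checking for characters in the Unicode Devanagari block, to choose between Hindi and English. The `VOICES` table and function names are hypothetical, and a production system would rely on a proper language-identification model, since Devanagari is also used for other languages such as Marathi and Nepali.

```python
# Hypothetical voice identifiers; a real TTS engine defines its own names.
VOICES = {"hi": "hindi-voice", "en": "english-voice"}


def detect_language(text: str) -> str:
    """Return "hi" if any character falls in the Devanagari block (U+0900 to U+097F)."""
    if any("\u0900" <= ch <= "\u097f" for ch in text):
        return "hi"
    return "en"  # fallback used by this sketch for everything else


def pick_reply_voice(query: str) -> dict:
    """Choose the reply language and voice so the answer matches the query."""
    lang = detect_language(query)
    return {"language": lang, "voice": VOICES[lang]}


print(pick_reply_voice("मुझे लोन चाहिए"))
print(pick_reply_voice("I need a loan"))
```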

The Future

The future of voice-to-voice AI is poised to transform communication, making human-machine interactions more seamless and natural. With advancements in deep learning, large language models (LLMs), and real-time speech synthesis, it will soon be capable of engaging in fluid, context-aware conversations that closely mimic human speech. Emotional intelligence will allow it to adopt different tones, accents, and even emotions, making interactions more personalized and engaging. Real-time translation will break language barriers, enabling effortless global communication.

Businesses will integrate the technology into customer service, virtual assistants, and smart devices, reducing the need for text-based interactions. The technology will also benefit accessibility, empowering individuals through real-time voice augmentation and assistance. As models become more sophisticated, ethical concerns around deepfake voices, privacy, and misuse will need regulation to prevent fraud and misinformation. However, with responsible development, voice-to-voice AI will redefine the way we interact with technology, making it more intuitive, efficient, and human-like than ever before.

Conclusion

The technology is reshaping the way we communicate, offering seamless, real-time voice interactions that enhance accessibility, efficiency, and personalization. From its early development in speech recognition to the advanced Large Language Models of today, this technology has evolved to provide natural, human-like conversations across various industries.

Its applications in customer service, multilingual communication, fraud prevention, and personalized user experiences make it a game-changer, particularly in sectors like BFSI. As the technology continues to advance, it will further bridge communication gaps, improve security, and redefine human-AI interactions. By focusing on transparency, privacy protection, and ethical AI development, this technology will continue to enhance human interactions, breaking language barriers and creating more personalized and accessible experiences for everyone.

FAQs

Can Voice-to-Voice AI recognize emotions?

Advanced systems can analyze vocal cues like tone and speed to detect emotions and respond empathetically, enhancing user experience.

What is the role of large language models (LLMs)?

LLMs enhance natural language understanding, real-time processing, and contextual accuracy, improving the fluency and relevance of AI-driven voice interactions.

What industries will benefit the most?

Industries such as banking, finance, insurance, customer service, and contact centers leverage it for seamless and personalized communication.

What are the technologies used?

ASR converts speech to text using phonemes, voice patterns, and accents for transcription, virtual assistants, and commands.

LLMs refine text, ensure accuracy, enable translation, and generate human-like responses.

TTS converts text to natural speech with realistic intonation and expression.

Does it support multiple languages?

Yes, many advanced systems can process and respond in multiple languages, making them ideal for global communication and translation applications.

What is the future?

The future includes even more natural-sounding AI voices, improved real-time language translation, emotional recognition, and deeper personalization for individual users.

Explore how it can transform your customer interactions and streamline your business processes. Book a personalized consultation today!