Voice AI is rapidly changing how businesses, especially those in the Banking, Financial Services, and Insurance (BFSI) sector, interact with customers. The global voice-based customer service market is projected to keep growing, reflecting the increasing adoption of voice agents and their potential to improve customer engagement and drive conversions.

The History of Voice AI

Voice AI has evolved significantly since its beginnings in the mid-20th century. The journey began in 1952 with Bell Labs’ “Audrey,” which could recognize spoken digits. The early 1960s saw advancements like IBM’s “Shoebox,” capable of understanding 16 words. From the 1970s onward, Hidden Markov Models were applied to speech recognition, which improved accuracy and helped make continuous speech recognition practical.

The 1970s and 1980s marked further progress as voice recognition technology was integrated into telephone systems, allowing for automated call routing. The 1990s brought consumer-friendly applications such as Dragon NaturallySpeaking, while the late 2000s and early 2010s introduced Google Voice Search and virtual assistants like Siri, transforming how customers interact with technology.

More recently, Gen AI and large language models (LLMs) have enabled more refined voice AI systems. As this technology continues to develop, it is becoming an essential part of daily life, making interactions with devices more intuitive and efficient. Understanding this history is essential for leveraging the technology effectively.

What is Voice AI?

Voice AI is an advanced technology that enables machines to understand, interpret, and respond to human speech. It combines three key components: speech recognition, which converts spoken language into text; language understanding, typically powered by large language models (LLMs), which comprehends the context and intent behind the words; and voice synthesis, or text-to-speech (TTS), which transforms written text back into spoken language.
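The way these components hand work to one another can be sketched in a few lines of Python. This is only an illustration: `VoicePipeline`, `fake_asr`, `fake_llm`, and `fake_tts` are hypothetical names, and the three stages are placeholder functions standing in for real speech and language models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chains the three stages: speech -> text -> reply text -> speech."""
    recognize: Callable[[bytes], str]    # speech recognition: audio in, transcript out
    respond: Callable[[str], str]        # language understanding: transcript in, reply out
    synthesize: Callable[[str], bytes]   # text-to-speech: reply text in, audio out

    def handle_turn(self, audio_in: bytes) -> bytes:
        transcript = self.recognize(audio_in)
        reply_text = self.respond(transcript)
        return self.synthesize(reply_text)


# Placeholder stages (assumptions) so the sketch runs without any speech library.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio bytes are already the words


def fake_llm(transcript: str) -> str:
    if "balance" in transcript.lower():
        return "Your balance is available in the app."
    return "Could you repeat that?"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretend the encoded text is synthesized audio


pipeline = VoicePipeline(fake_asr, fake_llm, fake_tts)
print(pipeline.handle_turn(b"What is my balance?").decode("utf-8"))
```

Keeping each stage behind a plain function makes it possible to swap in a real recognizer, model, or synthesizer without changing the turn-handling logic.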

Voice AI has numerous applications across various sectors. In virtual assistants like Amazon Alexa and Google Assistant, customers can perform tasks using voice commands, enhancing convenience. In customer service, AI-enabled voice agents automate interactions, reducing wait times and operational costs. Additionally, automotive systems allow drivers to control features hands-free, enhancing safety.

Voice AI enhances customer experience, increases efficiency, and improves accessibility for individuals with disabilities. As this technology evolves, it continues to transform how we interact with devices and each other in the digital landscape.

Why Is Voice AI Important?

This technology plays a crucial role in modern communication and interaction, offering numerous benefits that enhance customer experience and operational efficiency across various sectors.

Improved Accessibility: Voice AI significantly improves accessibility for individuals with disabilities by enabling them to interact with technology using natural speech. This capability allows customers to access information and services more easily, breaking down barriers that traditional interfaces may impose.

Consistent Customer Experience: By providing a simpler way to interact with devices, voice AI creates a consistent customer experience. Customers can engage with technology through voice commands, making tasks like managing smart home devices or accessing customer support faster and more efficient.

Reduction in Operational Cost: AI-powered voice agents can lead to over 80% savings compared to using live answering services or hiring staff. They also reduce training expenses and lower infrastructure maintenance costs by automating routine tasks and streamlining operations.

24/7 Availability: It enables businesses to offer round-the-clock customer support, ensuring that customers can receive assistance anytime without delays. This constant availability improves customer satisfaction and loyalty.

Personalized Omnichannel Marketing: Voice AI is helping retailers offer more personalized experiences across channels. From voice-assisted shopping to tailored recommendations, it makes customer interactions smoother, improving engagement and convenience.

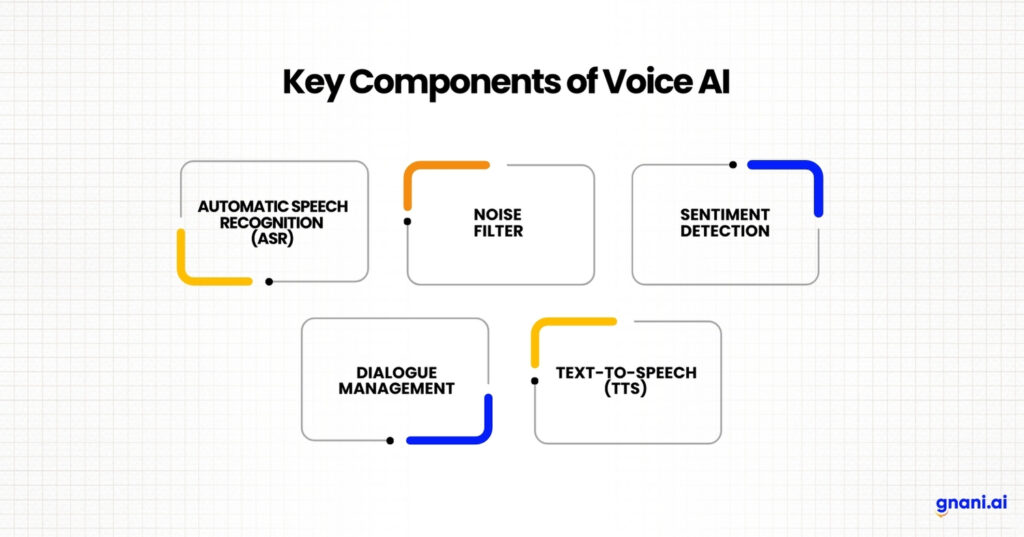

Components of Voice AI

- Automatic Speech Recognition (ASR): Converts spoken language into text. ASR systems analyze audio input, filter out background noise, and identify speech patterns to transcribe spoken words accurately. The key stages in ASR include voice input, signal processing, feature extraction, and pattern recognition.

- Noise Filter: Cleans the caller’s audio by removing background noise and glitches. This ensures the message is clear so the voice agent can respond with higher accuracy.

- Sentiment Detection: Analyzes both spoken words and vocal tone to determine a speaker’s emotional state, combining text-based sentiment analysis with speech analytics for a deeper understanding. By leveraging Gen AI models, it enables businesses to improve customer interactions, optimize decision-making, and enhance user experience.

- Dialogue Management: Acts as the brain of the voice AI system. It maintains the conversation context, and based on the user’s input and current context, it decides the next action.

- Text-to-Speech (TTS): Converts written text into spoken words. TTS systems generate human-like speech by synthesizing audio waveforms from text input. The main steps in TTS include text analysis, phonetic transcription, prosody generation, and audio synthesis.
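The dialogue management step described above, keeping context and choosing the next action, can be illustrated with a small slot-filling sketch. The intents, slot names, and action labels here are hypothetical, and a production system would usually delegate this decision to an LLM or a dedicated dialogue framework.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical intents and the details each one needs before it can run.
REQUIRED_SLOTS = {
    "check_balance": [],
    "transfer_funds": ["amount", "recipient"],
}


@dataclass
class DialogueManager:
    """Keeps conversation context and picks the next action for each turn."""
    intent: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)

    def next_action(self, intent: Optional[str], entities: Dict[str, str]) -> str:
        # A newly recognized intent resets the context; otherwise keep it.
        if intent in REQUIRED_SLOTS and intent != self.intent:
            self.intent, self.slots = intent, {}
        if self.intent is None:
            return "ask_intent"
        # Merge details from this turn with what earlier turns provided.
        self.slots.update(entities)
        for slot in REQUIRED_SLOTS[self.intent]:
            if slot not in self.slots:
                return f"ask_{slot}"
        return f"execute_{self.intent}"


dm = DialogueManager()
print(dm.next_action("transfer_funds", {"amount": "500"}))  # recipient still missing
print(dm.next_action(None, {"recipient": "Asha"}))          # context carried over
```

The second turn carries no intent of its own, yet the manager completes the transfer because it remembered the amount from the first turn.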

Understanding Voice AI in BFSI

Voice AI is transforming the financial services landscape by enabling seamless interactions between customers and financial institutions. This technology utilizes LLMs, TTS, and automatic speech recognition to interpret and respond to spoken commands, making financial management more accessible and efficient.

The primary application in finance is virtual agents. These AI-powered tools allow customers to perform various tasks, such as checking account balances, transferring funds, and managing investments, simply by using their voice. This convenience eliminates lengthy wait times and manual searches for information, enhancing the overall customer experience.

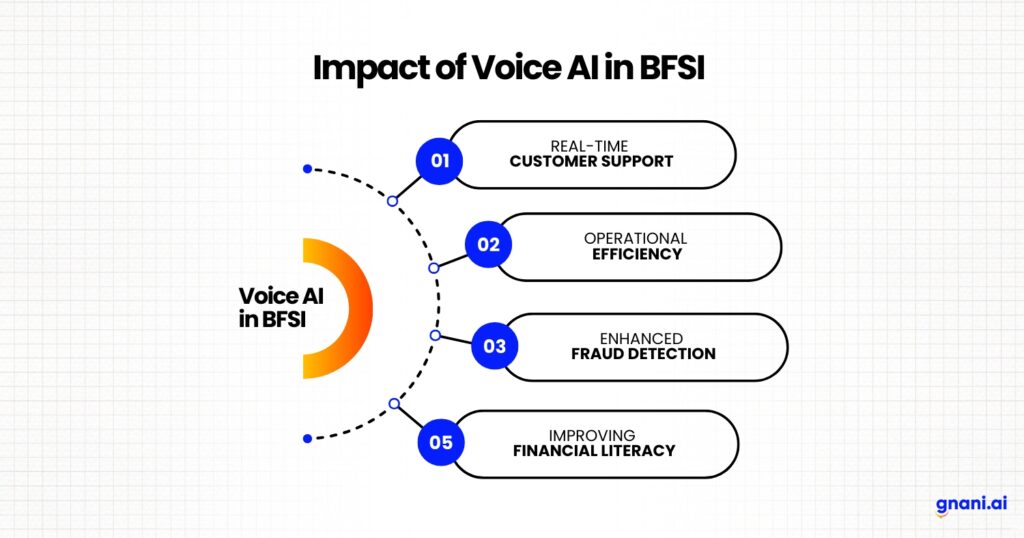

The Impact of Voice AI in BFSI

Voice AI is significantly transforming the financial services sector, enhancing customer interactions and streamlining operations. Here are some key ways it is making an impact:

Real-Time Customer Support: Real-time customer support transforms the way businesses interact with their customers, providing immediate assistance and enhancing overall satisfaction. Voice AI assistants leverage advanced technologies such as automatic speech recognition (ASR) and large language models (LLMs) to engage customers effectively.

Operational Efficiency: Voice AI automates customer inquiries, reducing manual workload and errors. It gives quick access to critical financial information through voice commands, which enhances productivity and lets professionals focus on strategic decision-making. The result is higher customer satisfaction and processes that are more efficient and scalable.

Enhanced Fraud Detection: Automatic speech recognition (ASR) technology can analyze speech patterns to identify anomalies that may indicate fraudulent activity. This proactive approach helps protect both clients and institutions from potential losses.

Improving Financial Literacy: Financial institutions are using Voice AI to provide educational content and advice, thereby improving financial literacy among clients. This initiative promotes better understanding and management of personal finances.

Differences Between Voice AI and Traditional AI

| Aspect | Voice AI | Traditional AI |
| --- | --- | --- |
| Interaction Mode | Operates primarily through spoken language, allowing for natural, intuitive communication. | Relies on text-based inputs or graphical user interfaces, often requiring more structured interactions. |
| Flexibility | Uses advanced large language models (LLMs) to understand context, tone, and intent, enabling dynamic conversations. | Often relies on predefined responses and lacks the ability to handle nuanced or complex queries effectively. |
| Learning Capabilities | Continuously learns from customer interactions to improve accuracy and personalization over time. | Typically limited in learning capabilities, relying on static datasets and predefined rules for responses. |
| Applications | Commonly used in virtual assistants, customer service voice agents, and smart home devices requiring real-time interaction. | Widely applied in data analysis, predictive modeling, and automation tasks that do not require voice interaction. |
| Efficiency | Provides 24/7 availability for customer inquiries and can handle multiple simultaneous interactions without human intervention. | May require human oversight and is often limited by staff availability for handling queries or tasks. |

Key Metrics to Evaluate the Success of Voice AI

- First Call Resolution (FCR): Measures the ability to resolve customer issues during the initial interaction. A high FCR indicates efficient processes and quick issue resolution, elevating customer experience. Strive for an FCR rate of 80%. Improving FCR requires continuous training of the AI and enhancements to its knowledge base.

- OpEx Reduction: Quantifies the decrease in operational costs achieved by automating routine inquiries and support tasks, reducing the need for a large customer support team. Track savings in labour costs, infrastructure, and other operational overheads. Regularly analyze cost-efficiency gains to optimize resource allocation.

- Bot Accuracy: Assesses the accuracy of the voice agent in understanding and responding to customer inquiries. High accuracy ensures customers receive correct information and appropriate assistance. Measure accuracy using metrics like word error rate (WER) and intent recognition rate. Aim for bot accuracy above 90%, and regularly update the bot’s training data to adapt to new language patterns and industry-specific terminology.

- Improved Efficiency: Measures how AI automates tasks like updating customer information, creating tickets, and routing users, allowing human agents to focus on complex issues. Evaluate efficiency gains by tracking the number of tasks automated and the time saved. Optimize workflows to ensure seamless integration between AI and human agents.

- Increased CSAT: Evaluates user satisfaction with interactions via surveys, feedback, or sentiment analysis. Higher scores mean your AI voice agent is effective. Collect customer feedback immediately after interactions with the voice AI. Use sentiment analysis to gauge the emotional tone of customer feedback and identify areas for improvement.

- Reduced Average Handle Time (AHT): Tracks the duration of customer interactions, where shorter times indicate improved efficiency. Analyze AHT trends to identify bottlenecks and optimize AI workflows for faster resolutions. Continuous improvement in AHT can lead to significant cost savings.

- Latency: Measures the delay between the customer finishing speaking and the voice agent starting to respond. Low latency ensures natural, engaging interactions, enhancing user satisfaction and efficiency, particularly in real-time applications. By optimizing for speed with efficient models, businesses can drive customer loyalty, reduce operational costs, and improve overall usability.

- Call Abandonment Rate: Measures the percentage of customers who abandon a call before their task is complete, which can indicate engagement issues. Monitor call abandonment rates to identify pain points in the customer experience. Analyze abandonment patterns to pinpoint areas for improvement in the voice AI’s ability to handle complex or frustrating situations.

- Net Promoter Score (NPS): Measures customer loyalty and the likelihood of customers recommending a product or service. A declining NPS may reflect poor voice assistant performance, where customers are becoming frustrated due to frequent misunderstandings or a general feeling that they are not being supported. To increase NPS, reduce call wait times by automating simple, high-volume inquiries, design natural-sounding automated solutions, and ask for feedback from agents and customers to improve service.
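Several of the metrics above reduce to simple arithmetic over call records. The sketch below shows one common way to compute FCR, call abandonment rate, AHT, NPS, and WER. The `Call` record and its field names are hypothetical, the sample data is made up for illustration, and exact definitions (for example, whether abandoned calls count toward AHT) vary between contact centers.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Call:
    duration_sec: float            # handle time for the call
    resolved_first_contact: bool   # issue solved without a follow-up
    abandoned: bool                # caller left before completion


def fcr_rate(calls: List[Call]) -> float:
    """Percentage of calls resolved during the first interaction."""
    return 100 * sum(c.resolved_first_contact for c in calls) / len(calls)


def abandonment_rate(calls: List[Call]) -> float:
    """Percentage of calls the customer abandoned."""
    return 100 * sum(c.abandoned for c in calls) / len(calls)


def average_handle_time(calls: List[Call]) -> float:
    """Mean duration in seconds of calls that were not abandoned (an assumption)."""
    handled = [c.duration_sec for c in calls if not c.abandoned]
    return sum(handled) / len(handled)


def nps(scores: List[int]) -> float:
    """Percent promoters (9-10) minus percent detractors (0-6) on a 0-10 survey."""
    promoters = sum(s >= 9 for s in scores)
    detractors = sum(s <= 6 for s in scores)
    return 100 * (promoters - detractors) / len(scores)


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (r != h)))   # substitution or match
        prev = cur
    return prev[-1] / len(ref)


# Made-up sample data for illustration only.
calls = [Call(180, True, False), Call(240, False, False),
         Call(30, False, True), Call(120, True, False)]
print(fcr_rate(calls), abandonment_rate(calls), average_handle_time(calls))
print(nps([10, 9, 9, 7, 4]))
print(word_error_rate("transfer five hundred rupees to savings",
                      "transfer nine hundred rupees savings"))
```

Tracking these figures over time, rather than as one-off snapshots, makes it easier to see whether changes to the voice agent actually move the numbers.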

Role of Gnani.ai

Gnani.ai, a leader in deep tech innovation, has been at the forefront of transforming voice AI with its cutting-edge technologies. With expertise in Automatic Speech Recognition (ASR), Text-to-Speech (TTS), Speech-to-Speech Models, and Industry-Specific Small Language Models (SLMs), Gnani.ai delivers unparalleled AI solutions tailored for the Banking, Financial Services, and Insurance (BFSI) sector. Serving over 200 clients, Gnani.ai’s proprietary AI stack enhances customer interactions, optimizes agent efficiency, and ensures seamless, human-like conversations across all customer touchpoints.

Automate365: This solution enables the automation of both outbound and inbound customer interactions across multiple channels, including voice, chat, and email. Banks can leverage this LLM-based technology to handle customer support calls and execute automated marketing campaigns. It enhances First Call Resolution (FCR), offers multilingual support, and improves scalability and flexibility.

Assist365: This GenAI-powered solution acts as a co-pilot, providing real-time guidance to agents during customer interactions. It suggests knowledge base articles, scripts, and next-best actions, helping reduce Average Handle Time (AHT). Additionally, it supports agents with automatic note-taking and summarization, enhancing efficiency and accuracy.

Aura365: This solution analyzes customer interactions across channels to identify trends, pain points, and opportunities for improvement. It ensures 100% compliance tracking and includes features like sentiment analysis, automated quality assurance, and post-facto speech analytics. Designed for scalability and adaptability, it meets evolving business needs and supports over 40 languages.

The Future of Voice AI

The future of voice AI is poised for remarkable advancements, driven by innovations in LLMs, SLMs, speech-to-speech models, voice recognition, and integration with smart devices. By 2025, we can expect AI voice assistants to engage in more nuanced and contextually aware conversations, moving beyond simple command-response interactions to anticipate user needs and preferences. This evolution will be facilitated by enhanced LLM capabilities that allow these systems to understand complex queries and respond in a more human-like manner, significantly improving user experience and satisfaction.

Additionally, the rise of multilingual support in voice assistants will cater to an increasingly global user base. Future voice technologies will not only understand multiple languages but also adapt to cultural nuances, making them invaluable tools for businesses operating in diverse markets. Emotional intelligence is another exciting frontier; future AI assistants will analyze vocal tone and emotion to respond empathetically, enhancing their utility in sensitive environments like healthcare.

Conclusion

Voice AI is transforming customer interactions across industries, particularly in the BFSI sector, by enabling seamless, efficient, and intelligent conversations. From its early beginnings to its current state of advanced ASR, TTS, and LLM technologies, it has evolved into a game-changing tool that enhances customer experience, improves accessibility, and drives operational efficiency.

The adoption of Gen AI-driven voice agents, real-time analytics, and automated customer support is paving the way for a smarter and more scalable future.

As technology advances, the future of Voice AI will bring even more personalized and human-like interactions, multilingual capabilities, and seamless integration with ASR and LLMs. Companies that embrace these innovations will not only enhance customer engagement but also gain a competitive edge in the rapidly evolving digital landscape.

The key to success lies in continuously refining AI capabilities, monitoring key performance metrics, and adapting to new trends. Voice AI is not just the future; it is the present, shaping how businesses communicate, serve, and grow in a Gen AI-driven world.

FAQs

What is voice AI?

Voice AI uses speech recognition to understand and interpret spoken language, allowing customers to resolve inquiries through natural conversation. It adapts dynamically to user inputs, offering seamless, natural, and context-aware interactions that mimic human communication.

What is the difference between voice AI and speech recognition?

Speech recognition transcribes spoken words into written text. Voice AI understands spoken language and can generate human-like responses.

What are the applications of Voice AI?

It is used in:

- Customer Support – AI voice agents handle inquiries, complaints, and FAQs.

- Virtual Assistants – Examples include Alexa, Siri, and Google Assistant.

- Contact Centers – Enhances agent performance with real-time insights.

- Sales & Marketing – Engages customers with personalized voice interactions.

Can Voice AI detect emotions?

Yes. It uses sentiment analysis and voice analytics to detect emotions like frustration, happiness, or urgency based on speech patterns and tone.

What are the benefits of using Voice AI?

- Reduces operational costs with automation.

- Improves customer experience with quick and accurate responses.

- Enhances decision-making through speech analytics and insights.

What is the future of Voice AI?

The future includes more human-like conversations, multilingual capabilities, real-time sentiment adaptation, and deeper integration with business ecosystems for seamless automation.